On 10 June 2018, our Training Director, Professor Joel Lee, published a blog post on the Kluwer Mediation Blog entitled "A Neuro-Linguist's Toolbox - Rapport: Representational Systems (Part 2)". His blog post is reproduced in full below.

A Brief Recap

This entry is part of an ongoing series focused on using Neuro-Linguistic Programming in our practice of amicable dispute resolution. For ease of reference and the convenience of readers, I will list the earlier entries in the series in this and subsequent entries.

- A Neuro-Linguist's Toolbox - A Starting Point and Building Rapport

- A Neuro-Linguist's Toolbox - Rapport: Non-Verbal Behaviours

- A Neuro-Linguist's Toolbox - Rapport: Representational Systems (Part 1)

In the third part of this series, we focused on how one could build rapport using representational systems by identifying the predicates that were in use by the counterpart.

In this fourth part of the series, I would like to focus on other ways of identifying the representational system that is in use at any given point in time.

We will look at each of the visual, auditory, kinesthetic and digital representational systems in turn. For each of these, we will look at four aspects (where relevant and appropriate) that provide clues to the representational system in play: posture, gestures, rate of speech and breath. One quick caveat: these four aspects are systemic and should not be looked at in isolation. Instead, we need to assess them holistically.

Visual Processing

Someone who is processing visually tends to have an upright posture. They will hold their bodies straight with their heads tilted slightly upwards, and while they may not stand still, their bodies or feet will move very little. Sometimes it may seem as if they were looking at something in the air in front of them. And in a sense, they are: they are looking at the pictures inside their mind space.

Consistent with this, when they talk, their hand gestures often occur above chest height, sometimes at head height. These gestures may be animated and seem to point to or illustrate things in the air. This makes sense if they are trying to point out to you the things they are seeing inside their mind.

Because of their posture, someone processing visually will exhibit shallow breathing, usually in the top third of the chest. This is commonly detected by the marked rise and fall of the shoulders.

This will in turn affect their rate of speech. They will tend to speak quite quickly and often at a higher pitch (relative to their normal pitch register). Again, this makes sense if you imagine that they have many images or a very clear image and they are trying to put into words what they see.

Auditory Processing

Someone who is processing auditorily tends to have a relaxed posture, neither too upright nor hunched. Their heads may nod, bob or move from side to side, and their bodies may also shift from side to side, almost as if they were moving to a beat inside their head. And in a sense, they are.

Their hand gestures will be at chest height or mid-torso and will generally move rhythmically, often in time with the beat of their speech, to emphasise the impact of what is being said.

Breathing is usually in the mid-chest, although this can be hard to detect. Their speech tends to be rhythmic and their pitch variable, sometimes even sing-song.

Kinesthetic Processing

Someone who is processing kinesthetically tends to have a hunched posture, and their heads are often angled downwards. They may also move very little, almost as if their bodies were very heavy.

Their hand gestures, if any, are often at the lower torso and are generally not animated. Again, they might move as if they were very heavy.

Someone processing kinesthetically will breathe deeply and fully. Sometimes it may even seem as if they were sighing. This in turn affects their speech: they will speak relatively slowly and are likely to speak at a lower pitch (relative to their normal pitch register). It is almost as if they were feeling and weighing everything in order to process it.

Digital Processing

Someone who is processing digitally tends to have an upright posture with a relatively unmoving body. Their head is often tilted to the side as if listening to something, and they will use minimal hand gestures. However, they may cross their arms when they speak or rest a hand on their chin or the side of their head, almost as if they were on the telephone.

Someone who is processing digitally will manifest variable rates of speech, pitch and breathing. However, their choice of words will almost always be measured and precise. These will be the wordsmiths of the world.

So these non-verbal c(l)ues provide us with additional ways to identify the representational system that is currently in operation. It is important to point out that this is more art than science, although there is some science involved. As such, one should make a judgment call, taking into account both the predicates and these non-verbal c(l)ues.

Identifying additional indicators of representational systems

One way to practise is to engage in the pastime of people-watching. The next time you are part of a group having a conversation and you don’t have to speak, listen to and watch the people speaking. Identify their predicates and notice how the predicates correlate with how they hold their body and head, how much they move, where they breathe, their rate of speech, their pitch and their hand gestures.

As you become more skilful at this, begin to practise it when having a conversation with someone. Of course, do this in a social, low-risk situation until it becomes second nature to you. Then, depending on your assessment of which representational system they are using, match their non-verbals, use predicates from that representational system, and notice how this affects your rapport and communication with them. And just for fun, mismatch the non-verbals and predicates and notice what difference that makes.

Eye-Accessing C(l)ues

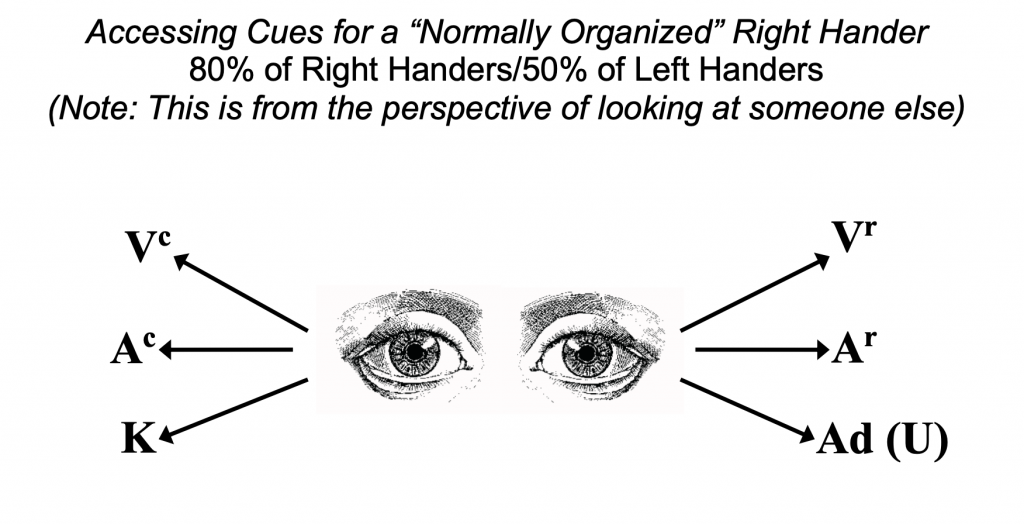

There is one additional non-verbal c(l)ue that I wanted to discuss separately: eye-accessing cues. The NLP model of eye-accessing cues postulates that one’s eyes will move in certain directions to access certain types of information. According to this model, when someone is accessing the visual representational system, their eyes will move up and to the right or left. When someone is accessing the auditory representational system, their eyes will move horizontally to the right or left.

Before looking at kinesthetic and digital accessing, it is useful to note that the NLP eye-accessing cue model is a little more complex than what has just been presented. For example, the model distinguishes between an access that is remembered and one that is constructed. So, if the person’s eyes go up and to their left, for most people (80% of right-handers and 50% of left-handers) this means that they are accessing a remembered image. A question like “What is the colour of your living room?” might prompt this access. If the person’s eyes go up and to their right, for most people this means they are constructing an image. A question like “What would your living room look like painted with purple polka dots?” might prompt this access. The same applies to auditory access.

Do everyone's eyes move the same way?

For most people, whether the eyes go to their left or to their right determines whether the access is remembered or constructed, respectively.

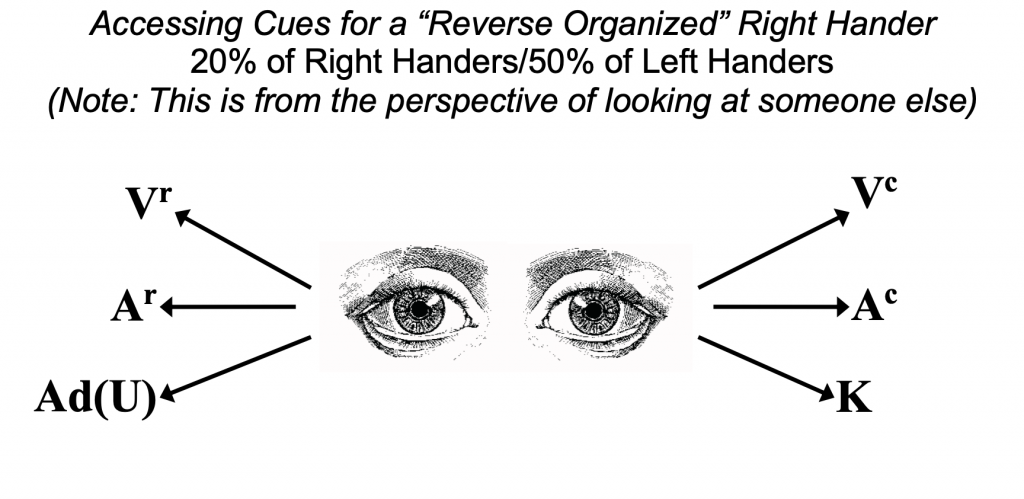

Two points can be made here. First, the reverse is true for the remaining people (20% of right-handers and the other 50% of left-handers). In other words, for people who fall into this category, the eyes moving to their left indicates a constructed access and moving to their right indicates a remembered one.

Secondly, it is important to note that a constructed sound or image does not mean that the person is lying. This is a fallacy that was unfortunately perpetuated by Hollywood.

From a communication perspective, this distinction does not affect us: when we see someone’s eyes move upwards, it is visual access, and when their eyes move horizontally, it is auditory access.

A Trickier Level: Looking Down

Having now noted this additional layer of complexity, we can look at kinesthetic and digital access. In most people, when their eyes move down and to their left, they are accessing digitally. Put another way, they are talking to themselves. A question like “Can you recite your favourite nursery rhyme quietly to yourself?” might prompt this access. When their eyes move down and to their right, they are kinesthetically accessing feelings or sensations. A question like “How does your right foot feel right now?” might prompt this access.

Readers will quickly realise that, while the direction of the eyes (left or right) does not matter for identifying visual and auditory access, it does matter for kinesthetic and digital access. Without going into a complex analysis of this matter, three quick solutions present themselves.

First, you have the benefit of predicates and other cues to help you make an educated guess, and this will often resolve the matter. Second, if it does not, go with the averages. Since most people access kinesthetically when their eyes go down and to the right, communicate with them kinesthetically and calibrate to their response. If this maintains or improves rapport, then you have probably got it right. If it does not, try a digital response and see what happens.

Finally, if all else fails, go with the digital response. Readers will remember from the third post in this series that the digital system is separate from any particular representational system. The digital system is one of words, which are meta to sight, sound or touch. As such, when you speak to someone using the digital system, the listener is free to access whichever representational system they wish.

How might one practice this?

As with calibrating to the other non-verbal c(l)ues, practise by people-watching. Notice where people’s eyes go when they are listening or being asked a question. Note where their eyes go last before they respond, and identify that representational system. Correlate your observation with the predicates they use and the other non-verbal c(l)ues. Again, do this in a social, low-risk situation, not in your most important conversations, at least until you gain facility with this.

This brings us to the end of building rapport using representational systems. I trust it has given you new ideas to think about and that you have found it useful.

To learn more about how Neuro-Linguistic Programming can help you, join us on the journey towards solving "The People Puzzle", Prof Joel's flagship NLP training series. Obtain the blueprint to achieve greater self-awareness, enhance communication with others, and connect with people more effectively.

Unlock the People Puzzle today! 👉 https://peacemakers.sg/the-people-puzzle/